Extra note: I appreciate that model collapse in practice doesn’t really occur, because of curated datasets and the effort that does into ensuring good training data. But indulge me.

This post likely doesn’t read well, or even make any sense. I would reccommend reading the archived version first for a full explanation. Click here.

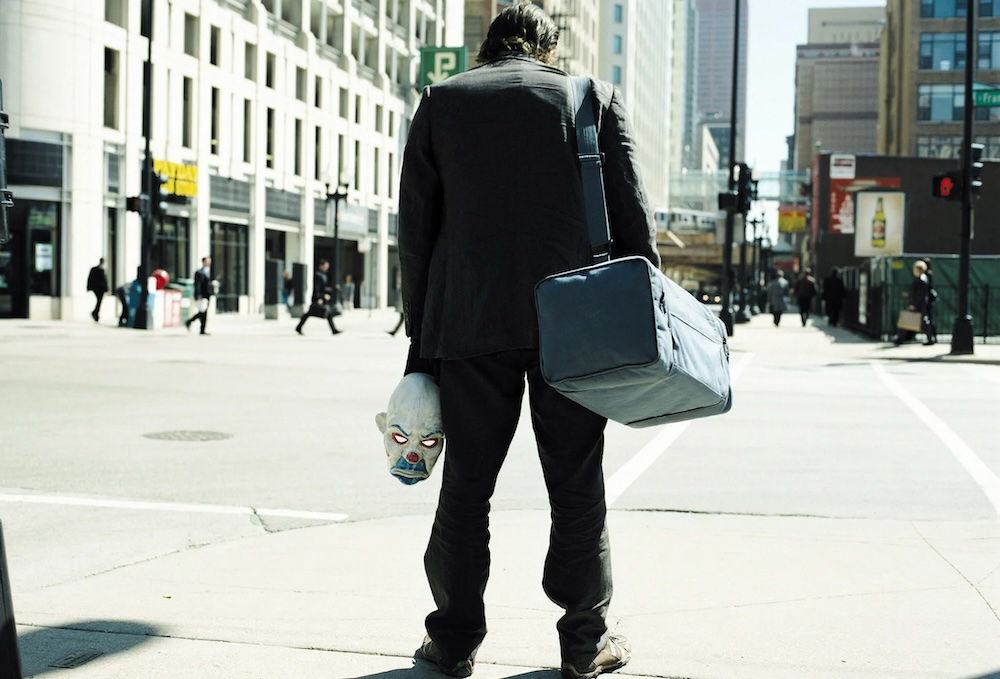

This post is rotting, and will soon become AI slop. What follows is a love letter to an internet that will never exist again, it is a self-fulfilling obituary. Ashes to ashes, dust to dust, slop… to slop.

The dead content theory states that sooner rather than later the content on the internet will be in the background and the majority of traffic will be when the content cannot be accessed by bot ; traffic will be accessed only by the bots. Every time it becomes less of a problem with dead content - generally malicious content ;

In the beginning was the Word, and then the next Word and the next Word, and then after enough words, someone claimed that a large-language model could think, and the Word was God1.

1 John 1:1, creatively embellished, some would say blasphemously.

Content traditionally produced by marketers, copywrighters, and journalists is slowly being replaced with generative content from LLMs. But what happens when the next-generation of LLMs are being trained on this pseudo-data from the internet?

The equivalent of the dead internet theory for LLMs, model-collapse illustrates a scenario where datasets become so poisoned that it becomes impossible to ever train a new LLM effectively2.

2 Why AI companies enter into multi-million pound contracts with news organisations with a wealth of verifiably human, proofread and well written content.

Like pre-trinity test low background radiation steel3, pre-large-language model content will become sought after. Humanity will see a return to the handwritten word, literature originating before chatGPT will be considered sacred, evidence of genuine human achievement.

And here, we, go…4

4

In London there is a Raspberry Pi running a cronjob. It has access to the source code to this very post and is also loaded with a small local BERT-based model5.

5 Specifically, google-bert/bert-base-uncased

Twice a day, a random sentence from this post will be selected, and a random word will be omitted. The small, local language model will be them prompted to infer the missing word. This word will be replaced, my site re-rendered, the changes committed to the Git repository, and reflected here6. Additionally I will prompt the model to add a word a day to the bottom of this post.

6 Stunning way to increase my Github contributions.

View Script & LLM Inference Code

#!/bin/bash

source mini_llama_env/bin/activate

python script.py

cd ../website

quarto render

git add -A

git commit -m "post continues to rot"

git push from transformers import pipeline

import numpy as np

import time

def process_file(filename):

# Read file content

with open(filename, 'r') as file:

data = file.readlines()

# Find lines with 'changeable_text'

changeable_lines = []

for i in range(len(data)):

if '''FLAG''' in data[i] :

changeable_lines.append(i+1)

break

random_line = np.random.choice(changeable_lines)

text = data[random_line]

# Process footnote if present

if '^[' in text:

footnote_index = text.index('^[')

end_footnote = text.index('\\x00]')

pre_footnote, post_footnote = text.split('^[')[0], text.split("\\x00]")[1]

footnote = text[footnote_index:end_footnote+5]

footnote_word_index = text[:footnote_index].count(' ')

print('FOOTNOTE WORD INDEX:', footnote_word_index)

data[random_line] = pre_footnote + post_footnote

# Replace random word with [MASK]

line = data[random_line].split()

random_word_index = np.random.randint(0, len(line))

print('REMOVED WORD', line[random_word_index])

rem_word_store = line[random_word_index]

line[random_word_index] = '[MASK]'

line = ' '.join(line)

# Use BERT to fill [MASK]

unmasker = pipeline('fill-mask', model='bert-base-uncased')

res = unmasker(line)

res.append({'token_str': rem_word_store})

word_index = 0

while res[word_index]['token_str'] in ['.', ',', '!', '?']:

word_index += 1

replacement_word = res[word_index]['token_str']

print('REPLACEMENT WORD:', replacement_word)

line = line.replace('[MASK]', replacement_word)

# Reinsert footnote if it existed

if 'footnote' in locals():

line_words = line.split()

line_words.insert(footnote_word_index, footnote)

line = ' '.join(line_words) + '\n'

line = line.replace(':::', '\n:::\n')

data[random_line] = line+'\n'

# Process 'extra' lines

extra_lines = [i+1 for i, line in enumerate(data) if 'FLAG' in line]

if extra_lines:

generated = data[extra_lines[0]].strip()

hypothesised = generated + ' [MASK]' + '. END OF STATEMENT.'

res = unmasker(hypothesised)

print('ADDED WORD:', res[0]['token_str'])

data[extra_lines[0]] = f"{generated} {res[0]['token_str']}\n"

# Update timestamp

utc_str = time.asctime(time.gmtime())

print('UTC:', utc_str)

data[-1] = f'''Updated: {utc_str}. Replaced {rem_word_store} with {replacement_word}. Added {res[0]['token_str']} to the end of the generated text.'''

# Write updated content back to file

with open(filename, 'w') as file:

file.writelines(data)

process_file('../website/posts/dead/index.qmd')Token to token, slop to slop, all good things must come to an end.

Slop

I the one left out there said no more words then left him speechless again . 1 pm est and then he left again again . 1 pm est est est

Updated: Sun Oct 13 20:03:07 2024. Replaced be with be. Added est to the end of the generated text.